According to CEO Sundar Pichai, it marks the dawn of a new AI era at Google: the Gemini era. Gemini, Google's latest large language model, was first teased at the I/O developer conference in May and is now making its public debut. Pichai and Google DeepMind CEO Demis Hassabis portray it as a major advance in AI, one that will eventually touch virtually every Google product. Pichai stresses the leverage of this moment: improving one underlying technology can immediately benefit many products at once.

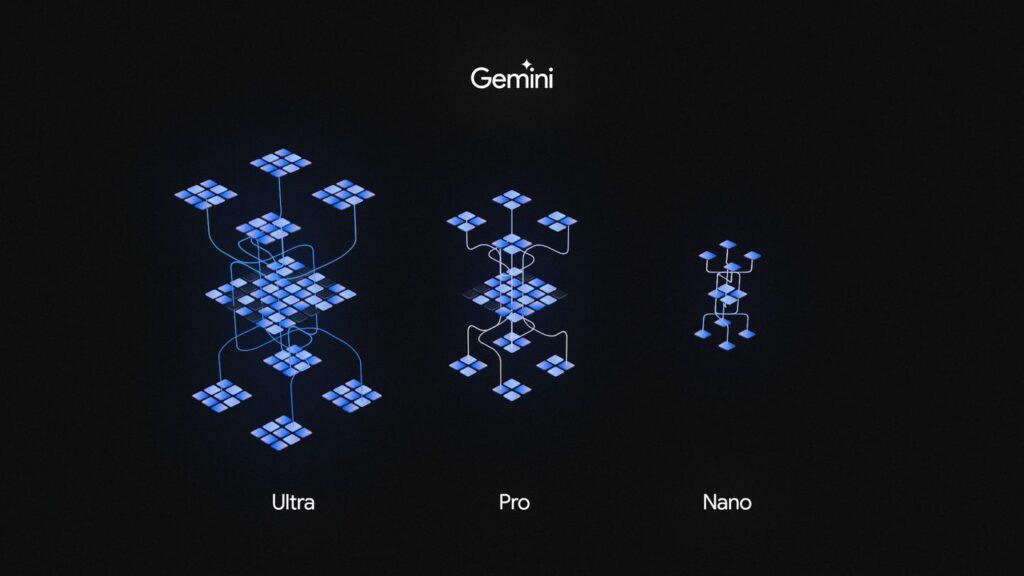

Gemini isn't a single AI model; it comes in several versions. Gemini Nano, a lightweight variant, is designed to run natively and offline on Android devices. The more capable Gemini Pro will power many of Google's AI services and, starting today, serves as the foundation for Bard. Finally, an even more powerful model, Gemini Ultra, is aimed at data centers and enterprise applications and is set to debut next year.

Google is rolling out the model in several ways. Bard now runs on Gemini Pro, and Pixel 8 Pro owners get new features powered by Gemini Nano. Starting December 13th, developers and enterprise customers can access Gemini Pro through Google AI Studio or Vertex AI in Google Cloud. Gemini is available only in English for now, but Pichai says the model will eventually be integrated into Google's search engine, its ad products, the Chrome browser, and more. Gemini is the future of Google, and it is arriving not a moment too soon.

A year and a week ago, OpenAI launched ChatGPT, catapulting the company and its product to the forefront of the AI landscape. Now Google, which pioneered much of the foundational technology behind the current AI boom and has called itself an "AI-first" company for nearly a decade, is ready to answer ChatGPT. Although it was caught off guard by how quickly OpenAI's technology came to dominate the industry, Google is now prepared to mount a comeback.

The obvious question is how Gemini stacks up against OpenAI's GPT-4. Google has clearly been thinking about this for a long time. "We've conducted a thorough analysis of the systems side by side, along with benchmarking," says Google DeepMind CEO Demis Hassabis. Google ran both models through 32 established benchmarks, ranging from broad tests like the Massive Multitask Language Understanding (MMLU) benchmark to evaluations of their ability to generate Python code. "I think we're substantially ahead on 30 out of 32" of those benchmarks, Hassabis says, with a hint of satisfaction. "Some are narrow, others more extensive."

Google says Gemini beats GPT-4 in 30 out of 32 benchmarks. (Image: Google)

On these benchmarks, where the differences are generally small, Gemini's clearest advantage is its ability to understand and work with video and audio. That advantage is by design: multimodality has been part of the Gemini plan from the start. Whereas OpenAI trained separate models for images (DALL-E) and voice (Whisper), Google chose to build a single multisensory model from the outset. Hassabis says the company has long been interested in very general systems, and especially in combining different modalities seamlessly. The goal is to gather as much data as possible from many kinds of inputs and senses, so that the model can respond with the same variety.

For now, Gemini's most basic models are text in, text out, while more powerful versions such as Gemini Ultra can handle images, video, and audio. According to Hassabis, that range will keep growing: Gemini could eventually incorporate action and touch, which is closer to robotics. He envisions Gemini gaining more senses over time and becoming more aware and more accurate as it does. He acknowledges that the models still hallucinate and carry biases, but argues that the more they learn, the better they will understand the world around them.

Benchmarks are useful, but the real test of Gemini will be everyday users relying on it to brainstorm, look up information, write code, and more. Google places particular emphasis on coding, which it sees as a standout use case for Gemini. As evidence, it is introducing AlphaCode 2, a new code-generation system that Google says outperforms 85 percent of coding competition participants, up from roughly 50 percent for the original AlphaCode. Pichai says users will see improvements in virtually everything the model touches.

Google also highlights Gemini's efficiency. Trained on Google's own Tensor Processing Units (TPUs), Gemini is, according to Google, faster and cheaper to run than earlier models such as PaLM. Alongside the new model, Google is introducing the TPU v5p, an updated version of its data center TPU system built for training and running large-scale models.

"Cautiously, but optimistically," Hassabis says

Talking with Pichai and Hassabis, it is clear that Gemini's launch is both the start of a larger project and a significant leap forward in its own right. Gemini is the culmination of years of work at Google: the model the company has been building for a long time, and perhaps the one it should have released before OpenAI and ChatGPT took over the world's attention.

Google felt the urgency created by ChatGPT, declaring a "code red" and scrambling to catch up, but the company insists it remains committed to its "bold and responsible" approach. Hassabis and Pichai both say they are reluctant to rush simply to keep pace, especially as the field moves toward the ultimate goal of AI research: artificial general intelligence (AGI), a self-improving AI smarter than humans that could reshape the world. Hassabis stresses the need for caution as that point draws closer and acknowledges that the situation is constantly changing, but he remains optimistic. "Cautiously, but optimistically," he says.

Google says it has put considerable effort into making Gemini safe and responsible, using internal and external testing as well as red-teaming. Pichai stresses that data security and reliability are critical, especially in enterprise-focused products, where much of generative AI's business lies. Hassabis, however, admits that launching an advanced AI system carries inherent risks, since unforeseen issues and attack vectors are bound to emerge. That, he argues, is exactly why the technology has to be released: so those problems can be found and learned from.

Google is releasing Gemini Ultra cautiously, in something like a controlled beta, with a "safer experimentation zone" for its most powerful and least restricted model. In effect, Google wants to find any unexpected problems through its own testing before users run into them.

For years, Pichai and other Google executives have talked up AI's transformative potential, with Pichai going so far as to call it more profound for humanity than fire or electricity. The first generation of Gemini may not change the world, but it could put Google on competitive footing with OpenAI in the race to build great generative AI. The optimism inside Google suggests something bigger, though: if the company is right, Gemini could be the beginning of a technology even more influential than the web that made Google a tech giant in the first place.