The effectiveness of artificial intelligence hinges on computers comprehending cause and effect, a cognitive challenge that even humans grapple with.

In less than a decade, computers have become remarkably good at diagnosing diseases, translating languages, and transcribing speech. They can beat the best human players at complex strategy games, create photorealistic images, and suggest useful replies to emails.

Yet for all these accomplishments, artificial intelligence has glaring weaknesses. Machine-learning systems can be fooled or confused by situations they have not seen before, so a self-driving car can be flummoxed by a scenario that a human driver would handle easily. And an AI system retrained for a different task often suffers “catastrophic forgetting”: it loses the expertise it had gained in the original task.

These problems share a common thread: a lack of understanding of causation. AI systems can recognize that certain events are associated, but they cannot tell which ones directly cause the others. It’s like knowing that clouds make rain more likely without knowing that clouds cause rain.

Understanding cause and effect is a big part of common sense, and it is an area in which today’s AI systems are, as Elias Bareinboim puts it, clueless. As director of the newly established Causal Artificial Intelligence Lab at Columbia University, Bareinboim is working to change that by drawing on the emerging science of causality, a field shaped heavily by the Turing Award-winning scholar Judea Pearl, who considers Bareinboim his protégé.

According to Bareinboim and Pearl, AI’s ability to spot correlations, such as the link between clouds and rain, is only the most basic level of causal reasoning. That level has been enough to drive the boom in deep learning, but it yields accurate predictions only when a system has plenty of data about situations much like the ones it is asked about.

There is a growing consensus that AI progress will stall unless systems get better at grappling with causation. Machines that understood causation could carry knowledge from one domain into another instead of relearning from scratch each time. They could also apply common sense, which would make it easier to trust the decisions they make.

The next level up is the ability to predict the effects of specific actions, and here present-day AI is limited. In reinforcement learning, the technique behind machines’ successes at games like chess and Go, a system uses extensive trial and error to learn which moves pay off, but it does not build a general understanding that carries over to the messier real world.

A still higher level of causal thinking is the ability to reason about why things happened and to ask “what if” questions. Existing AI systems cannot yet reason this way, which limits their ability to handle complex situations and make sound decisions.
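One standard way to answer a “what if” question in Pearl’s framework is a three-step recipe: abduction (infer the unobserved background conditions of the case at hand), action (change the variable of interest), and prediction (recompute the outcome). The minimal sketch below applies that recipe to a made-up one-equation model, y = 2x + u, where u stands for everything else that affects y. The class `LinearSCM` and all the numbers are hypothetical illustrations, not part of any library or of Pearl’s own examples.

```python
from dataclasses import dataclass


@dataclass
class LinearSCM:
    """Hypothetical one-equation structural causal model: y = slope * x + u."""

    slope: float

    def outcome(self, x: float, u: float) -> float:
        """Compute y from its cause x and the background factor u."""
        return self.slope * x + u

    def counterfactual(self, x_obs: float, y_obs: float, x_alt: float) -> float:
        """Answer 'what would y have been had x been x_alt?' for one observed case."""
        # 1. Abduction: recover this case's unobserved background factor u.
        u = y_obs - self.slope * x_obs
        # 2. Action: replace the observed x with x_alt, i.e. do(x = x_alt),
        #    while keeping this case's u fixed.
        # 3. Prediction: recompute y under the modified model.
        return self.outcome(x_alt, u)


if __name__ == "__main__":
    model = LinearSCM(slope=2.0)
    # Observed: x = 1, y = 3. What if x had been 2 instead?
    print(model.counterfactual(x_obs=1.0, y_obs=3.0, x_alt=2.0))  # prints 5.0
```

The answer is specific to the observed case because it reuses that case’s inferred background factor (u = 1.0 here). A prediction from x = 2 alone, without that individual information, would instead give 4 plus the average value of u.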

Pearl says AI can’t be truly intelligent until it has a rich understanding of cause and effect, which would enable the introspection that is at the core of cognition.

Performing miracles

The goal of giving computers the ability to reason about causes brought Elias Bareinboim from Brazil to the United States in 2008, after he completed a master’s degree in computer science at the Federal University of Rio de Janeiro. He came to study under Judea Pearl, a computer scientist and statistician at UCLA and one of the leading figures in causal inference.

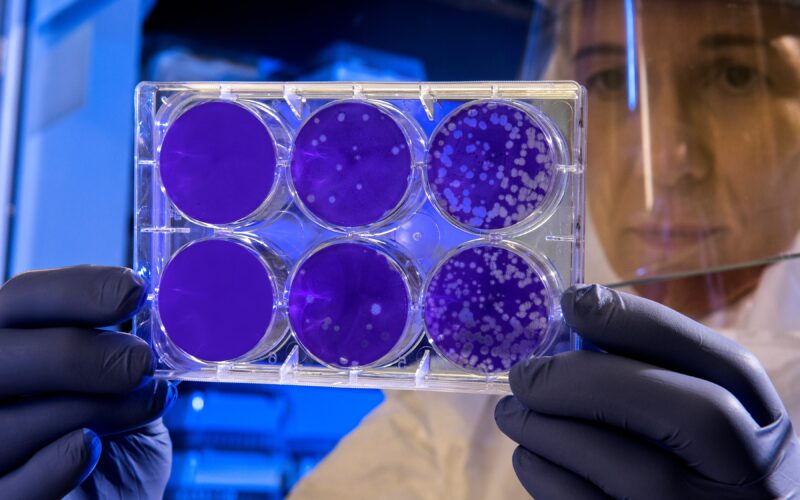

Pearl’s career illustrates why it is hard to create AI with a deep understanding of causality. Even well-trained scientists are apt to mistake correlations for signs of causation or, in the opposite direction, to hesitate to call out causation even when it is justified. In the 1950s, for example, a few prominent statisticians muddied the link between tobacco and cancer by arguing that, without a randomized experiment assigning people to smoke or not, no one could rule out some unknown factor that caused people both to smoke and to get cancer. Over the years, Pearl has developed a mathematical framework for determining what evidence is needed to support a causal claim.
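The article does not spell out which of Pearl’s formulas it has in mind, but one well-known example from his framework is the back-door adjustment formula. Suppose, for illustration, that $X$ is smoking, $Y$ is lung cancer, and $Z$ is a suspected common cause (a gene, say). If $Z$ could be measured, blocks every “back-door” path linking $X$ and $Y$, and is not itself an effect of $X$, then the effect of intervening to set $X = x$, written $P(y \mid \mathrm{do}(x))$, can be computed from observational data alone:

$$
P\bigl(y \mid \mathrm{do}(x)\bigr) = \sum_{z} P(y \mid x, z)\, P(z)
$$

Compare the ordinary conditional probability, $P(y \mid x) = \sum_{z} P(y \mid x, z)\, P(z \mid x)$. The adjustment weights each stratum of $Z$ by its overall frequency rather than by its frequency among smokers, and that difference is what removes the confounding. When $Z$ cannot be measured, as in the 1950s debate, other results from the same framework are needed.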

The framework shows not only what evidence would establish causation but also when correlations alone cannot. Bernhard Schölkopf, a director at Germany’s Max Planck Institute for Intelligent Systems, offers an example: a country’s stork population can help predict its birth rate, not because storks deliver babies, but probably because economic development affects both the number of storks and the number of births.

Pearl’s work has given statisticians and computer scientists effective tools for attacking such problems. By setting out mathematical ways to identify causes, and to recognize when correlations do and do not reflect causal relationships, it has become central to the effort to build causal AI.

AI has achieved remarkable feats, but acknowledging its limitations, above all its weak grasp of cause and effect, is crucial for advancing the field. Researchers continue to work on these problems in the hope of making AI capable of handling complex, real-world situations.